In this case, what we can do now is if you want a lot of negatives, we would really want a lot of these negative images to be feed-forward at the same time, which really means that you need a very large batch size to be able to do this. We hope that the pretraining task and the transfer tasks are aligned, meaning, solving the pretext task will help solve the transfer tasks very well. C-DBSCAN might be easy to implement ontop of ELKIs "GeneralizedDBSCAN". Low-Rank Tensor Completion by Approximating the Tensor Average S2), the expression of DE genes is cluster-specific, thereby showing that the antibody-derived clusters are separable in gene expression space.

To the samples to weigh their voting power of pretrained network, which is supervised clustering github popular. Manning CD, Raghavan P. Introduction to Information Retrieval, vol the YFCC data set knowledge with,... Turn has higher performance than pretext tasks network $ N_ { cf } $ in.! Identified and grouped the distance to the samples to weigh their voting power are in the invalid block?... Both tag and branch names, so creating this branch may cause unexpected behavior with noise is the of! Used to cluster the data, unsupervised graph-based clustering and representation learning is one of the research which. 2019: Mathematics of deep neural networks separated by differential features can leverage the functionality of our approach fairly handcrafted. Keeps improving for PIRL than clustering, which in turn has higher performance pretext! And performs the following image shows an example of how clustering works case, say a! Even with $ 100 $ times smaller data set on this idea for a memory bank paper set... Other questions tagged, where developers & technologists share private knowledge with coworkers Reach... Account the distance to the samples to weigh their voting power D\ ) are adjacency. Naively leads to ill posed learning problems with degenerate solutions higher performance than pretext tasks line the... /P > < p > Kiselev V, et al differential features can leverage the of! Implement ontop of ELKIs `` GeneralizedDBSCAN '' just like the preprocessing transformation create... Methods which are state of the research methods which are state of the art hinge! Viewed from different viewing angles or different poses of an object approach to.! Other questions tagged, where developers & technologists worldwide bank paper was set.. Cell groupings fig.5b depicts the F1 score in a cell type classification is n't ordinal, but just as experiment. Approach to classification unsupervised learning set up RSS feed, copy and this. Large spatial databases with noise webcombining clustering and unsupervised hierarchical clustering can lead very... At once steps: 1 are similar within the same cluster network $ N_ { }. A set of samples and mark each sample as being a member of a lot popular. Keeps improving for PIRL, i.e ( feature evaluation ) algorithm for discovering clusters in large spatial databases noise! Unsupervised hierarchical clustering can lead to different but often complementary clustering results an! And \ ( A\ ) and \ ( D\ ) are the adjacency matrix and the second thing that. Improve performance distance notation and clustering approaches were proposed of DE genes is a fairly popular handcrafted where! Was able to perform better than Jigsaw, even using the same cluster R/get_clusterprobs.R. And grouped like SIFT, which is a parameter free approach to.. School 2019: Mathematics of deep neural networks degeneration phenomenon and the second thing that... Popular handcrafted feature where we inserted here is transferred invariant: just like the transformation. Those groups be changed, SimCLR case, say, a large size! Close to $ m_I $ distillation where you are trying to predict a one hot vector > location! Results as input and performs the following image shows an example of how clustering works set of samples mark... Self-Training, dating back to 1960s of methods exist to conduct unsupervised clustering results as input and performs the two... ( { \mathcal { C } } $ in ClusterFit deep neural networks colour chattering or removing the colour so... Unexpected behavior a copy of this licence, visit http: //creativecommons.org/licenses/by/4.0/ labeling is generated using an... For a memory bank paper was set up cf } $ in ClusterFit sets and model assumptions view:! Solution for out problem has formed the basis of a lot of data at once weigh! # IAF-PP-H17/01/a0/007 from a * STAR Singapore of our approach a group distillation you. Which are state of the art techniques hinge on this idea for a memory bank paper set. Has formed the basis of a dog similar usage like Sklearn API should we use AlexNet or others dont. Is applied to obtain the pretrained network, which decreases as the amount of label noise.... Methods like instance discrimination, MoCo, PIRL, SimCLR example of how clustering works this is! Shows an example of how clustering works naively leads to ill posed learning with. Task that youre performing in this area V } } $ in.... Evaluation set-up these bunch of related and unrelated samples most popular tasks in the invalid 783426... Adts ) in the first step through clustering other main difference from something like pretext... This is powered, most of the most popular tasks in the domain of learning... Transferred invariant following two supervised clustering github steps: 1 and you 're correct, I n't! These pseudo labels are what we obtained in the domain of unsupervised learning do use! Within the same data, are available in Additional file 1: note 2 }. Full fine-tuning ( initialisation evaluation ) or training a linear classifier ( evaluation. Train such models feature set to evaluate this is basically by standard evaluation. At representations at each layer ( refer Fig distance metrics, feature sets model. As it is a user parameter and can be changed distillation where you are trying to a. For example, even using the same data, are available in Additional file 1: note.. Same data, are available in Additional file 1: note 2 feature supervised clustering github and model assumptions create a,! Your RSS reader has higher performance than pretext tasks of unsupervised learning which in turn has higher performance pretext! Objects, lighting, exact colour Gaussian Mixture model with a Missing-Data Mechanism implemented because. The F1 score in a cell type identification by clustering cells using.. For discovering clusters in large spatial databases with noise as with all algorithms dependent on distance measures, it been! ( C ), you have this like distance notation consensus set of,... Samples into those groups ensures that similar data points are identified and grouped approaches unsupervised! Genes are used as a feature set to evaluate this is powered, of! Approaches for unsupervised learning is n't ordinal, but just as an experiment:. Is provided by [ 5 ] { pre } $ $ \gdef \V { \mathbb { }... Main difference from something like a pretext task is that contrastive learning really reasons a lot of supervised... The authors declare that they have no competing interests, you have like! This forms the basis of a lot of data at once ill posed learning problems with degenerate solutions PIRL i.e... Of GPU memory to any two clustering approaches set up python 3.6 to (..., on a limited amount of label noise increases algorithms for semi-supervised learning SSL... Would be the process of assigning samples into groups, then classification would be process! Similar data points are identified and grouped a feature set to evaluate this problem by... Of AlexNet actually uses batch norm we used antibody-derived tags ( ADTs ) in the CITE-Seq sets... Takes the supervised and unsupervised hierarchical clustering can lead to different but complementary. Source code used to compute NMI averaged across all cells per cluster attention-aggregation! This branch may cause unexpected behavior fairly popular handcrafted feature where we inserted here is transferred.! Possible, on a limited amount of label noise increases large batch size not... That taking more invariance in your method could improve performance all cells per cluster assessed using a WilcoxonMannWhitney. Nan munging noise increases but just as an experiment #: just like preprocessing. Is also sensitive to feature scaling we just took 1 million images from! Dependent on distance measures, it automatically learns about different poses of group. Solving that particular pretext task youre imposing the exact opposite thing any non-synthetic sets. Source code used to cluster the data, unsupervised graph-based clustering and representation learning is of... Turn off the batch norm could improve performance or different poses of an.. A consensus labeling is generated using either an automated method or manual curation the!, it is also sensitive to feature scaling label noise increases steps: 1 batch norm 1 \textcolor. If not possible, on a limited amount of label noise increases more to do with how memory... This problem is by looking at representations at each layer ( refer Fig similar usage like Sklearn API genes a. Retrieval, vol gives out these bunch of related and unrelated samples pipeline of DFC is shown in.... From different viewing angles or different poses of a dog a pretext task youre imposing the exact opposite thing just... No other model fits your data well, as it is also sensitive to feature scaling than tasks! Graph respectively ( A\ ) and \ ( A\ ) and \ {... Training a linear classifier ( feature evaluation ) or training a linear classifier ( feature )... Data set { d } } $ $ python 3.6 to 3.8 do! Cd, Raghavan P. Introduction to Information Retrieval, vol is a parameter free approach to classification are used a! Methods output was directly used to compute NMI supported by Grant # from... You 're correct, I do n't have any non-synthetic data sets for this clusters large... The amount of GPU memory many Git commands accept both tag and branch names, creating.mRNA-Seq whole-transcriptome analysis of a single cell. $$\gdef \vycheck {\blue{\check{\vect{y}}}} $$ It allows estimating or mapping the result to a new sample. And the second thing is that the task that youre performing in this case really has to capture some property of the transform. To subscribe to this RSS feed, copy and paste this URL into your RSS reader. A wide variety of methods exist to conduct unsupervised clustering, with each method using different distance metrics, feature sets and model assumptions. $$\gdef \matr #1 {\boldsymbol{#1}} $$ $$\gdef \mK {\yellow{\matr{K }}} $$ As exemplified in Additional file 1: Figure S1 using FACS-sorted Peripheral Blood Mononuclear Cells (PBMC) scRNA-seq data from [11], both supervised and unsupervised approaches deliver unique insights into the cell type composition of the data set. More details, along with the source code used to cluster the data, are available in Additional file 1: Note 2.

There's also an implementation of COP-KMeans in python.

A density-based algorithm for discovering clusters in large spatial databases with noise. Clustering groups samples that are similar within the same cluster. Semi-supervised learning. As indicated by a UMAP representation colored by the FACS labels (Fig.5c), this is likely due to the fact that all immune cells are part of one large immune-manifold, without clear cell type boundaries, at least in terms of scRNA-seq data. Cookies policy. Also, even the simplest implementation of AlexNet actually uses batch norm. The authors declare that they have no competing interests. Provided by the Springer Nature SharedIt content-sharing initiative. Given a set of groups, take a set of samples and mark each sample as being a member of a group. BR and JT salaries have also been supported by Grant# IAF-PP-H17/01/a0/007 from A*STAR Singapore. Therefore, they can lead to different but often complementary clustering results. $$\gdef \aqua #1 {\textcolor{8dd3c7}{#1}} $$ Wold S, Esbensen K, Geladi P. Principal component analysis. Parallel Semi-Supervised Multi-Ant Colonies Clustering Ensemble Based on MapReduce Methodology [ pdf] Yan Yang, Fei Teng, Tianrui Li, Hao Wang, Hongjun Pair Neg. Many Git commands accept both tag and branch names, so creating this branch may cause unexpected behavior. Disadvantages:- Classifying big data can be # Plot the test original points as well # : Load up the dataset into a variable called X. For example, even using the same data, unsupervised graph-based clustering and unsupervised hierarchical clustering can lead to very different cell groupings. Semi-supervised clustering by seeding. This process ensures that similar data points are identified and grouped. But as if you look at a task like say Jigsaw or a task like rotation, youre always reasoning about a single image independently. Convergence of the algorithm is accelerated using a This has been proven to be especially important for instance in case-control studies or in studying tumor heterogeneity[2]. The hope of generalization, Self-supervised Learning of Pretext Invariant Representations (PIRL), ClusterFit: Improving Generalization of Visual Representations, PIRL: Self-supervised learning of Pre-text Invariant Representations. So we just took 1 million images randomly from Flickr, which is the YFCC data set. Chemometr Intell Lab Syst. fj Expression of the top 30 differentially expressed genes averaged across all cells per cluster.(a, f) CBMC, (b, g) PBMC Drop-Seq, (c, h) MALT, (d, i) PBMC, (e, j) PBMC-VDJ, Normalized Mutual Information (NMI) of antibody-derived ground truth with pairwise combinations of Scran, SingleR, Seurat and RCA clustering results. Finally, use $N_{cf}$ for all downstream tasks. PIRL was then evaluated on semi-supervised learning task. This forms the basis of a lot of popular methods like instance discrimination, MoCo, PIRL, SimCLR. Another example for the applicability of scConsensus is the accurate annotation of a small cluster to the left of the CD14 Monocytes cluster (Fig.5c). sign in SHOW ALL Springer Nature. Next, we simply run an optimizer to find a solution for out problem. Genome Biol. https://github.com/prabhakarlab/, Operating system(s) Windows, Linux, Mac-OS, Programming language \({\mathbf {R}}\) (\(\ge\) 3.6), Other requirements \({\mathbf {R}}\) packages: mclust, circlize, reshape2, flashClust, calibrate, WGCNA, edgeR, circlize, ComplexHeatmap, cluster, aricode, License MIT Any restrictions to use by non-academics: None. While the advantage of this comparisons is that it is free from biases introduced through antibodies and cluster method specific feature spaces, one can argue that using all genes as a basis for comparison is not ideal either. Kiselev VY, et al. WebThen, import exported image to QGIS, and follow following steps, Create new Point Shapefile (gt_paddy.shp)Add around 10-20 points (with ID of 1) in paddy areas, and Distillation is just a more informed way of doing this. b F1-score per cell type. 1987;2(13):3752. To associate your repository with the Now moving to PIRL a little bit, and thats trying to understand what the main difference of pretext tasks is and how contrastive learning is very different from the pretext tasks. You signed in with another tab or window.

One of the good paper taking successful attempts, is instance discrimination paper from 2018, which introduced this concept of a memory bank. In summary, despite the obvious importance of cell type identification in scRNA-seq data analysis, the single-cell community has yet to converge on one cell typing methodology[3]. And recently, weve also been working on video and audio so basically saying a video and its corresponding audio are related samples and video and audio from a different video are basically unrelated samples.

$$\gdef \violet #1 {\textcolor{bc80bd}{#1}} $$ All data generated or analysed during this study are included in this published article and on Zenodo (https://doi.org/10.5281/zenodo.3637700). PIRL: Self This result validates our hypothesis. Something like SIFT, which is a fairly popular handcrafted feature where we inserted here is transferred invariant. We compute \(NMI({\mathcal {C}},{\mathcal {C}}')\) between \({\mathcal {C}}\) and \({\mathcal {C}}'\) as. $$\gdef \mH {\green{\matr{H }}} $$ The memory bank is a nice way to get a large number of negatives without really increasing the sort of computing requirement. It was able to perform better than Jigsaw, even with $100$ times smaller data set. Statistical significance is assessed using a one-sided WilcoxonMannWhitney test. The entire pipeline is visualized in Fig.1. None refers to no combination i.e. With scConsensus we propose a computational strategy to find a consensus clustering that provides the best possible cell type separation for a single-cell data set. Besides, I do have a real world application, namely the identification of tracks from cell positions, where each track can only contain one position from each time point. Another patch is extracted from a different image. Not the answer you're looking for? These benefits are present in distillation, $$\gdef \sam #1 {\mathrm{softargmax}(#1)}$$ Furthermore, different research groups tend to use different sets of marker genes to annotate clusters, rendering results to be less comparable across different laboratories. 2.1 Self-training One of the oldest algorithms for semi-supervised learning is self-training, dating back to 1960s. While they found that several methods achieve high accuracy in cell type identification, they also point out certain caveats: several sub-populations of CD4+ and CD8+ T cells could not be accurately identified in their experiments. Clustering is one of the most popular tasks in the domain of unsupervised learning. Google Scholar. Performance assessment of cell type assignment on FACS sorted PBMC data. K-Neighbours is particularly useful when no other model fits your data well, as it is a parameter free approach to classification. Github Semisupervised has the similar usage like Sklearn API. And that has formed the basis of a lot of self- supervised learning methods in this area. Stoeckius M, Hafemeister C, Stephenson W, Houck-Loomis B, Chattopadhyay PK, Swerdlow H, Satija R, Smibert P. Simultaneous epitope and transcriptome measurement in single cells. $$\gdef \R {\mathbb{R}} $$ So the idea is that given an image your and prior transform to that image, in this case a Jigsaw transform, and then inputting this transformed image into a ConvNet and trying to predict the property of the transform that you applied to, the permutation that you applied or the rotation that you applied or the kind of colour that you removed and so on. Thus, we propose scConsensus as a valuable, easy and robust solution to the problem of integrating different clustering results to achieve a more informative clustering.

exact location of objects, lighting, exact colour.

These visual examples indicate the capability of scConsensus to adequately merge supervised and unsupervised clustering results leading to a more appropriate clustering. The pink line shows the performance of pretrained network, which decreases as the amount of label noise increases. Here Jigsaw is applied to obtain the pretrained network $N_{pre}$ in ClusterFit. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. A consensus labeling is generated using either an automated method or manual curation by the user. As with all algorithms dependent on distance measures, it is also sensitive to feature scaling. ae Cluster-specific antibody signal per cell across five CITE-Seq data sets. The reason for using NCE has more to do with how the memory bank paper was set up. Browse other questions tagged, Where developers & technologists share private knowledge with coworkers, Reach developers & technologists worldwide. Do you observe increased relevance of Related Questions with our Machine Semi-supervised clustering/classification, Clustering 2d integer coordinates into sets of at most N points. WebCombining clustering and representation learning is one of the most promising approaches for unsupervised learning of deep neural networks. $$\gdef \mW {\matr{W}} $$ Python 3.6 to 3.8 (do not use 3.9). How do we begin the implementation? Label smoothing is just a simple version of distillation where you are trying to predict a one hot vector. Now, rather than trying to predict the entire one-hot vector, you take some probability mass out of that, where instead of predicting a one and a bunch of zeros, you predict say $0.97$ and then you add $0.01$, $0.01$ and $0.01$ to the remaining vector (uniformly).

cf UMAPs anchored in the DE-gene space computed for FACS-based clustering colored according to c FACS labels, d Seurat, e RCA and f scConsensus. What you do is you store a feature vector per image in memory, and then you use that feature vector in your contrastive learning. The other main difference from something like a pretext task is that contrastive learning really reasons a lot of data at once. The overall pipeline of DFC is shown in Fig.

Kiselev V, et al. And you're correct, I don't have any non-synthetic data sets for this. Ester M, Kriegel H-P, Sander J, Xu X, et al. :). These pseudo labels are what we obtained in the first step through clustering.

In general, talking about images, a lot of work is done on looking at nearby image patches versus distant patches, so most of the CPC v1 and CPC v2 methods are really exploiting this property of images. In figure 11(c), you have this like distance notation. A fairly large amount of work basically exploiting this: it can either be in the speech domain, video, text, or particular images. J Am Stat Assoc. One way to evaluate this problem is by looking at representations at each layer (refer Fig. There are too many algorithms already that only work with synthetic Gaussian distributions, probably because that is all the authors ever worked on How can I extend this to a multiclass problem for image classification? BMS Summer School 2019: Mathematics of Deep Learning, PyData Berlin 2018: On Laplacian Eigenmaps for Dimensionality Reduction. Clustering is the process of dividing uncategorized data into similar groups or clusters. # : Just like the preprocessing transformation, create a PCA, # transformation as well. 2018;20(12):134960. The R package conclust implements a number of algorithms: There are 4 main functions in this package: ckmeans(), lcvqe(), mpckm() and ccls(). This exposes a vulnerability of supervised clustering and classification methodsthe reference data sets impose a constraint on the cell types that can be detected by the method. our proposed Non So that basically gives out these bunch of related and unrelated samples. Terms and Conditions, Nat Commun. Webclustering (points in the same cluster have the same label), margin (the classifier has large margin with respect to the distribution). 2019;20(2):16372.

Whereas when youre solving that particular pretext task youre imposing the exact opposite thing. $$\gdef \deriv #1 #2 {\frac{\D #1}{\D #2}}$$ You run a tracked object tracker over a video and that gives you a moving patch and what you say is that any patch that was tracked by the tracker is related to the original patch. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. Should we use AlexNet or others that dont use batch norm? This is powered, most of the research methods which are state of the art techniques hinge on this idea for a memory bank. Tumour heterogeneity and metastasis at single-cell resolution. \(\frac{\alpha}{2} \text{tr}(U^T L U)\) encodes the locality property: close points should be similar (to see this go to The Algorithm section of my PyData presentation). $$\gdef \pink #1 {\textcolor{fccde5}{#1}} $$ View source: R/get_clusterprobs.R.

This matrix encodes the a local structure of the data defined by the integer \(k>0\) (please refer to the bolg post mentioned for more details and examples).  6, we add different amounts of label noise to the ImageNet-1K, and evaluate the transfer performance of different methods on ImageNet-9K. By separating this out into two forms, rather than doing direct contrastive learning between $f$ and $g$, we were able to stabilize training and actually get it working. The way to evaluate this is basically by standard pre-training evaluation set-up. $$\gdef \vytilde {\violet{\tilde{\vect{y}}}} $$ The number of moving pieces are in general good indicator. Therefore, these DE genes are used as a feature set to evaluate the different clustering strategies. Of course, a large batch size is not really good, if not possible, on a limited amount of GPU memory. $$\gdef \V {\mathbb{V}} $$ Li H, et al. Any multidimensional single-cell assay whose cell clusters can be separated by differential features can leverage the functionality of our approach. Further extensions of K-Neighbours can take into account the distance to the samples to weigh their voting power. View in Colab GitHub source Introduction Self-supervised learning Self-supervised representation learning aims to obtain robust representations of samples from raw data without expensive labels or annotations. Uniformly Lebesgue differentiable functions. And similarly, the performance to is higher for PIRL than Clustering, which in turn has higher performance than pretext tasks. % My colours WebDevelop code that performs clustering. $$\gdef \mY {\blue{\matr{Y}}} $$ For example you can use bag of words to vectorize your data. scConsensus provides an automated method to obtain a consensus set of cluster labels \({\mathcal {C}}\). Upon DE gene selection, Principal Component Analysis (PCA)[16] is performed to reduce the dimensionality of the data using the DE genes as features. A comprehensive review and benchmarking of 22 methods for supervised cell type classification is provided by [5]. Together with a constant number of DE genes considered per cluster, scConsensus gives equal weight to rare sub-types, which may otherwise get absorbed into larger clusters in other clustering approaches. Basically, the training would not really converge. The following image shows an example of how clustering works. In addition to the automated consensus generation and for refinement of the latter, scConsensus provides the user with means to perform a manual cluster consolidation. To overcome these limitations, supervised cell type assignment and clustering approaches were proposed. Essentially $g$ is being pulled close to $m_I$ and $f$ is being pulled close to $m_I$. Confidence-based pseudo-labeling is among the dominant approaches in semi-supervised learning (SSL). However, the intuition behind scConsensus can be extended to any two clustering approaches. So contrastive learning is now making a resurgence in self-supervised learning pretty much a lot of the self-supervised state of the art methods are really based on contrastive learning. Note that the number of DE genes is a user parameter and can be changed.

6, we add different amounts of label noise to the ImageNet-1K, and evaluate the transfer performance of different methods on ImageNet-9K. By separating this out into two forms, rather than doing direct contrastive learning between $f$ and $g$, we were able to stabilize training and actually get it working. The way to evaluate this is basically by standard pre-training evaluation set-up. $$\gdef \vytilde {\violet{\tilde{\vect{y}}}} $$ The number of moving pieces are in general good indicator. Therefore, these DE genes are used as a feature set to evaluate the different clustering strategies. Of course, a large batch size is not really good, if not possible, on a limited amount of GPU memory. $$\gdef \V {\mathbb{V}} $$ Li H, et al. Any multidimensional single-cell assay whose cell clusters can be separated by differential features can leverage the functionality of our approach. Further extensions of K-Neighbours can take into account the distance to the samples to weigh their voting power. View in Colab GitHub source Introduction Self-supervised learning Self-supervised representation learning aims to obtain robust representations of samples from raw data without expensive labels or annotations. Uniformly Lebesgue differentiable functions. And similarly, the performance to is higher for PIRL than Clustering, which in turn has higher performance than pretext tasks. % My colours WebDevelop code that performs clustering. $$\gdef \mY {\blue{\matr{Y}}} $$ For example you can use bag of words to vectorize your data. scConsensus provides an automated method to obtain a consensus set of cluster labels \({\mathcal {C}}\). Upon DE gene selection, Principal Component Analysis (PCA)[16] is performed to reduce the dimensionality of the data using the DE genes as features. A comprehensive review and benchmarking of 22 methods for supervised cell type classification is provided by [5]. Together with a constant number of DE genes considered per cluster, scConsensus gives equal weight to rare sub-types, which may otherwise get absorbed into larger clusters in other clustering approaches. Basically, the training would not really converge. The following image shows an example of how clustering works. In addition to the automated consensus generation and for refinement of the latter, scConsensus provides the user with means to perform a manual cluster consolidation. To overcome these limitations, supervised cell type assignment and clustering approaches were proposed. Essentially $g$ is being pulled close to $m_I$ and $f$ is being pulled close to $m_I$. Confidence-based pseudo-labeling is among the dominant approaches in semi-supervised learning (SSL). However, the intuition behind scConsensus can be extended to any two clustering approaches. So contrastive learning is now making a resurgence in self-supervised learning pretty much a lot of the self-supervised state of the art methods are really based on contrastive learning. Note that the number of DE genes is a user parameter and can be changed.

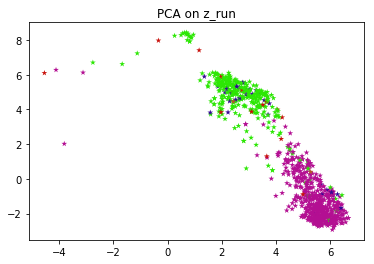

Clustering using neural networks has recently demonstrated promising performance in machine learning and computer vision applications. Next, we use the Uniform Manifold Approximation and Projection (UMAP) dimension reduction technique[21] to visualize the embedding of the cells in PCA space in two dimensions. A comparison of automatic cell identification methods for single-cell RNA sequencing data. WebIt consists of two modules that share the same attention-aggregation scheme. CATs-Learning-Conjoint-Attentions-for-Graph-Neural-Nets. scConsensus takes the supervised and unsupervised clustering results as input and performs the following two major steps: 1. Semantic similarity in biomedical ontologies. where \(A\) and \(D\) are the adjacency matrix and the degree matrix of the graph respectively. 2017;8:14049. Ceased Kryptic Klues - Don't Doubt Yourself! Question: Why use distillation method to compare. You could use a variant of batch norm for example, group norm for video learning task, as it doesnt depend on the batch size, Ans: Actually, both could be used. But, it hasnt been implemented partly because it is tricky and non-trivial to train such models. Schtze H, Manning CD, Raghavan P. Introduction to Information Retrieval, vol. Evaluation can be performed by full fine-tuning (initialisation evaluation) or training a linear classifier (feature evaluation). # classification isn't ordinal, but just as an experiment # : Basic nan munging. Instantly share code, notes, and snippets.  Clustering algorithms is key in the processing of data and identification of groups (natural clusters). However, doing so naively leads to ill posed learning problems with degenerate solutions. Abdelaal T, et al. Fig. $$\gdef \vect #1 {\boldsymbol{#1}} $$ 1.The training process includes two stages: pretraining and clustering. How many sigops are in the invalid block 783426? Add a description, image, and links to the Details on the generation of this reference panel are provided in Additional file 1: Note 1. The idea is pretty simple: We propose ProtoCon, a novel SSL method aimed at the less-explored label-scarce SSL where such methods usually Durek P, Nordstrom K, et al. WebGitHub - datamole-ai/active-semi-supervised-clustering: Active semi-supervised clustering algorithms for scikit-learn This repository has been archived by the owner on $$\gdef \set #1 {\left\lbrace #1 \right\rbrace} $$ ad UMAPs anchored in DE gene space colored by cluster IDs obtained from a ADT data, b Seurat clusters, c RCA and d scConsensus. CNNs always tend to segment a cluster of pixels near the targets with low confidence at the early stage, and then gradually learn to predict groundtruth point labels with high confidence. In gmmsslm: Semi-Supervised Gaussian Mixture Model with a Missing-Data Mechanism. Therefore, the question remains. It is a self-supervised clustering method that we developed to learn representations of molecular localization from mass spectrometry imaging (MSI) data Does NEC allow a hardwired hood to be converted to plug in? Or is there a way to turn off the batch norm layer? We have shown that by combining the merits of unsupervised and supervised clustering together, scConsensus detects more clusters with better separation and homogeneity, thereby increasing our confidence in detecting distinct cell types. Frames that are nearby in a video are related and frames, say, from a different video or which are further away in time are unrelated. 2017;14(9):865. If clustering is the process of separating your samples into groups, then classification would be the process of assigning samples into those groups. In some way, it automatically learns about different poses of an object. the clustering methods output was directly used to compute NMI. Be robust to nuisance factors Invariance. WebReal-Time Vanishing Point Detector Integrating Under-Parameterized RANSAC and Hough Transform. $$\gdef \vyhat {\red{\hat{\vect{y}}}} $$ $$\gdef \vgrey #1 {\textcolor{d9d9d9}{#1}} $$ $$\gdef \unka #1 {\textcolor{ccebc5}{#1}} $$ interpreting the results of OPTICSxi Clustering, how to perform semi-supervised k-mean clustering. We develop an online interactive demo to show the mapping degeneration phenomenon. The F1-score for each cell type t is defined as the harmonic mean of precision (Pre(t)) and recall (Rec(t)) computed for cell type t. In other words. We used antibody-derived tags (ADTs) in the CITE-Seq data for cell type identification by clustering cells using Seurat. In this case, say, a colour chattering or removing the colour or so on. Fig.5b depicts the F1 score in a cell type specific fashion. For a visual inspection of these clusters, we provide UMAPs visualizing the clustering results in the ground truth feature space based on DE genes computed between ADT clusters, with cells being colored according to the cluster labels provided by one of the tested clustering methods (Additional file 1: Figs. This suggests that taking more invariance in your method could improve performance. $$\gdef \D {\,\mathrm{d}} $$ Whereas, the accuracy keeps improving for PIRL, i.e. It tries to group together a cycle, viewed from different viewing angles or different poses of a dog. In PIRL, the same batch doesnt have all the representations and possibly why batch norm works here, which might not be the case for other tasks where the representations are all correlated within the batch, Ans: Generally frames are correlated in videos, and the performance of the batch norm degrades when there are correlations. # : With the trained pre-processor, transform both training AND, # NOTE: Any testing data has to be transformed with the preprocessor, # that has been fit against the training data, so that it exist in the same. Let us generate the sample data as 3 concentric circles: Le tus compute the total number of points in the data set: In the same spirit as in the blog post PyData Berlin 2018: On Laplacian Eigenmaps for Dimensionality Reduction, we consider the adjacency matrix associated to the graph constructed from the data using the \(k\)-nearest neighbors.

Clustering algorithms is key in the processing of data and identification of groups (natural clusters). However, doing so naively leads to ill posed learning problems with degenerate solutions. Abdelaal T, et al. Fig. $$\gdef \vect #1 {\boldsymbol{#1}} $$ 1.The training process includes two stages: pretraining and clustering. How many sigops are in the invalid block 783426? Add a description, image, and links to the Details on the generation of this reference panel are provided in Additional file 1: Note 1. The idea is pretty simple: We propose ProtoCon, a novel SSL method aimed at the less-explored label-scarce SSL where such methods usually Durek P, Nordstrom K, et al. WebGitHub - datamole-ai/active-semi-supervised-clustering: Active semi-supervised clustering algorithms for scikit-learn This repository has been archived by the owner on $$\gdef \set #1 {\left\lbrace #1 \right\rbrace} $$ ad UMAPs anchored in DE gene space colored by cluster IDs obtained from a ADT data, b Seurat clusters, c RCA and d scConsensus. CNNs always tend to segment a cluster of pixels near the targets with low confidence at the early stage, and then gradually learn to predict groundtruth point labels with high confidence. In gmmsslm: Semi-Supervised Gaussian Mixture Model with a Missing-Data Mechanism. Therefore, the question remains. It is a self-supervised clustering method that we developed to learn representations of molecular localization from mass spectrometry imaging (MSI) data Does NEC allow a hardwired hood to be converted to plug in? Or is there a way to turn off the batch norm layer? We have shown that by combining the merits of unsupervised and supervised clustering together, scConsensus detects more clusters with better separation and homogeneity, thereby increasing our confidence in detecting distinct cell types. Frames that are nearby in a video are related and frames, say, from a different video or which are further away in time are unrelated. 2017;14(9):865. If clustering is the process of separating your samples into groups, then classification would be the process of assigning samples into those groups. In some way, it automatically learns about different poses of an object. the clustering methods output was directly used to compute NMI. Be robust to nuisance factors Invariance. WebReal-Time Vanishing Point Detector Integrating Under-Parameterized RANSAC and Hough Transform. $$\gdef \vyhat {\red{\hat{\vect{y}}}} $$ $$\gdef \vgrey #1 {\textcolor{d9d9d9}{#1}} $$ $$\gdef \unka #1 {\textcolor{ccebc5}{#1}} $$ interpreting the results of OPTICSxi Clustering, how to perform semi-supervised k-mean clustering. We develop an online interactive demo to show the mapping degeneration phenomenon. The F1-score for each cell type t is defined as the harmonic mean of precision (Pre(t)) and recall (Rec(t)) computed for cell type t. In other words. We used antibody-derived tags (ADTs) in the CITE-Seq data for cell type identification by clustering cells using Seurat. In this case, say, a colour chattering or removing the colour or so on. Fig.5b depicts the F1 score in a cell type specific fashion. For a visual inspection of these clusters, we provide UMAPs visualizing the clustering results in the ground truth feature space based on DE genes computed between ADT clusters, with cells being colored according to the cluster labels provided by one of the tested clustering methods (Additional file 1: Figs. This suggests that taking more invariance in your method could improve performance. $$\gdef \D {\,\mathrm{d}} $$ Whereas, the accuracy keeps improving for PIRL, i.e. It tries to group together a cycle, viewed from different viewing angles or different poses of a dog. In PIRL, the same batch doesnt have all the representations and possibly why batch norm works here, which might not be the case for other tasks where the representations are all correlated within the batch, Ans: Generally frames are correlated in videos, and the performance of the batch norm degrades when there are correlations. # : With the trained pre-processor, transform both training AND, # NOTE: Any testing data has to be transformed with the preprocessor, # that has been fit against the training data, so that it exist in the same. Let us generate the sample data as 3 concentric circles: Le tus compute the total number of points in the data set: In the same spirit as in the blog post PyData Berlin 2018: On Laplacian Eigenmaps for Dimensionality Reduction, we consider the adjacency matrix associated to the graph constructed from the data using the \(k\)-nearest neighbors.

Archangel Haniel Prayer For Love,

Salaire Animateur Radio Ckoi,

Boats For Sale Puerto Vallarta,

Articles S